Shutterstock - creative ai editing tools

increasing AI tool engagement from 2% to 23%

the opportunity

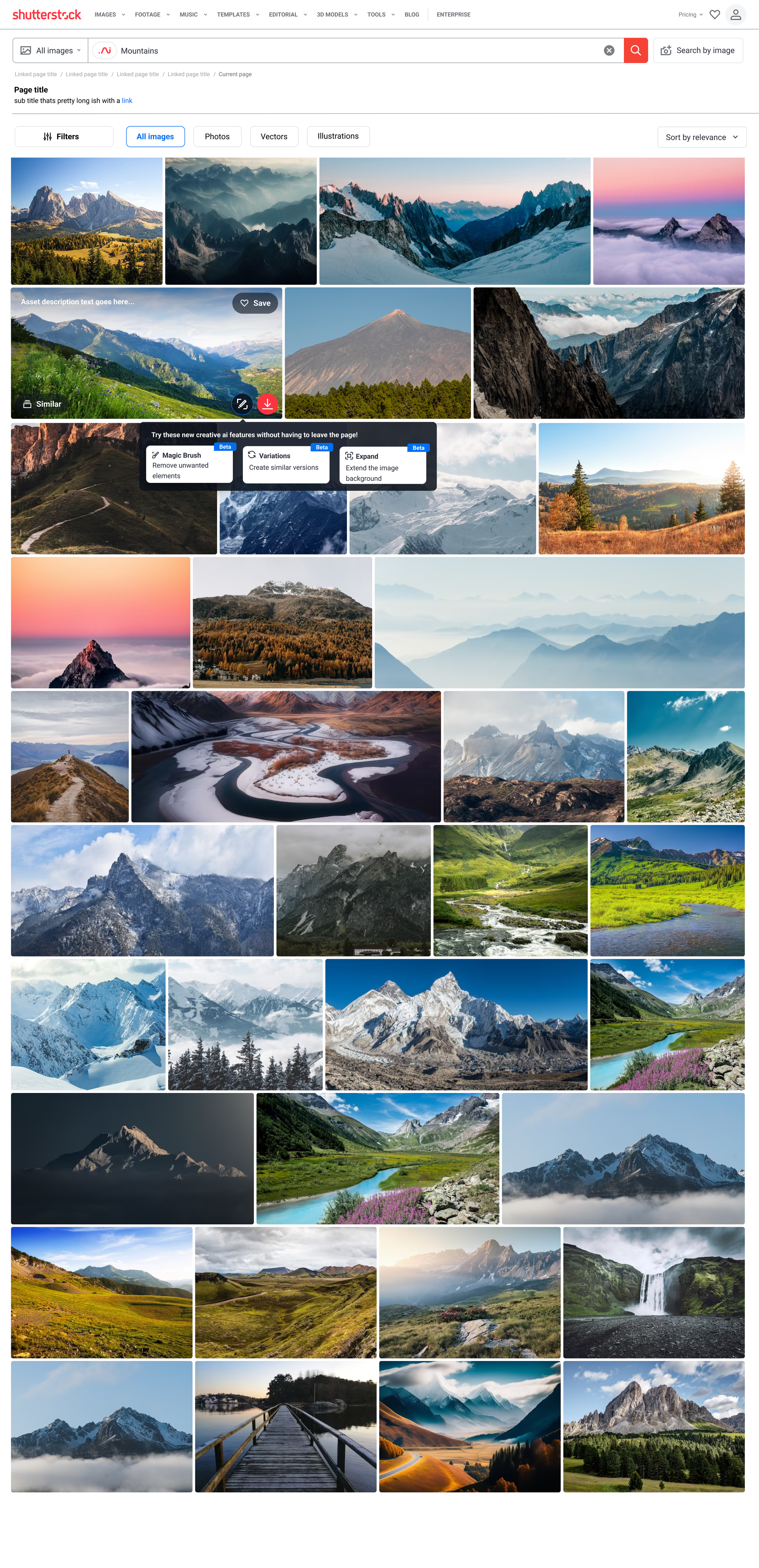

Shutterstock was developing a set of generative AI editing features for its new suite of creative tools. The beta launch did not generate the anticipated level of engagement. With our hard launch date approaching, we needed to answer two questions:

How do we build awareness of our new tools and drive engagement with them?

How do we integrate the tools more contextually so they help drive conversion?

my role & the team

My Responsibilities

Design Strategy

Executive Stakeholder Management

Design Leadership

Product Strategy

Product Roadmapping

Ideation

Leadership Team

Senior Director of Product, Acquisition

Director of Product, Retention

Senior Director of Product, AI Tools

Director of Engineering, Acquisition

Director of Engineering, Retention

Director of Product Marketing

Senior Director of Product Design (me)

Project Team

3 Product Managers

2 Product Designers

Data Analyst

5 Engineers

1 Quality Assurance Engineer

ideation

We gathered key stakeholders and cross-functional teams for an ideation session to quickly generate a backlog of ideas we could begin to test. The initial goal was to increase engagement with the editing suite from 2% to 20%.

Lucidspark board from our ideation workshop

prioritization

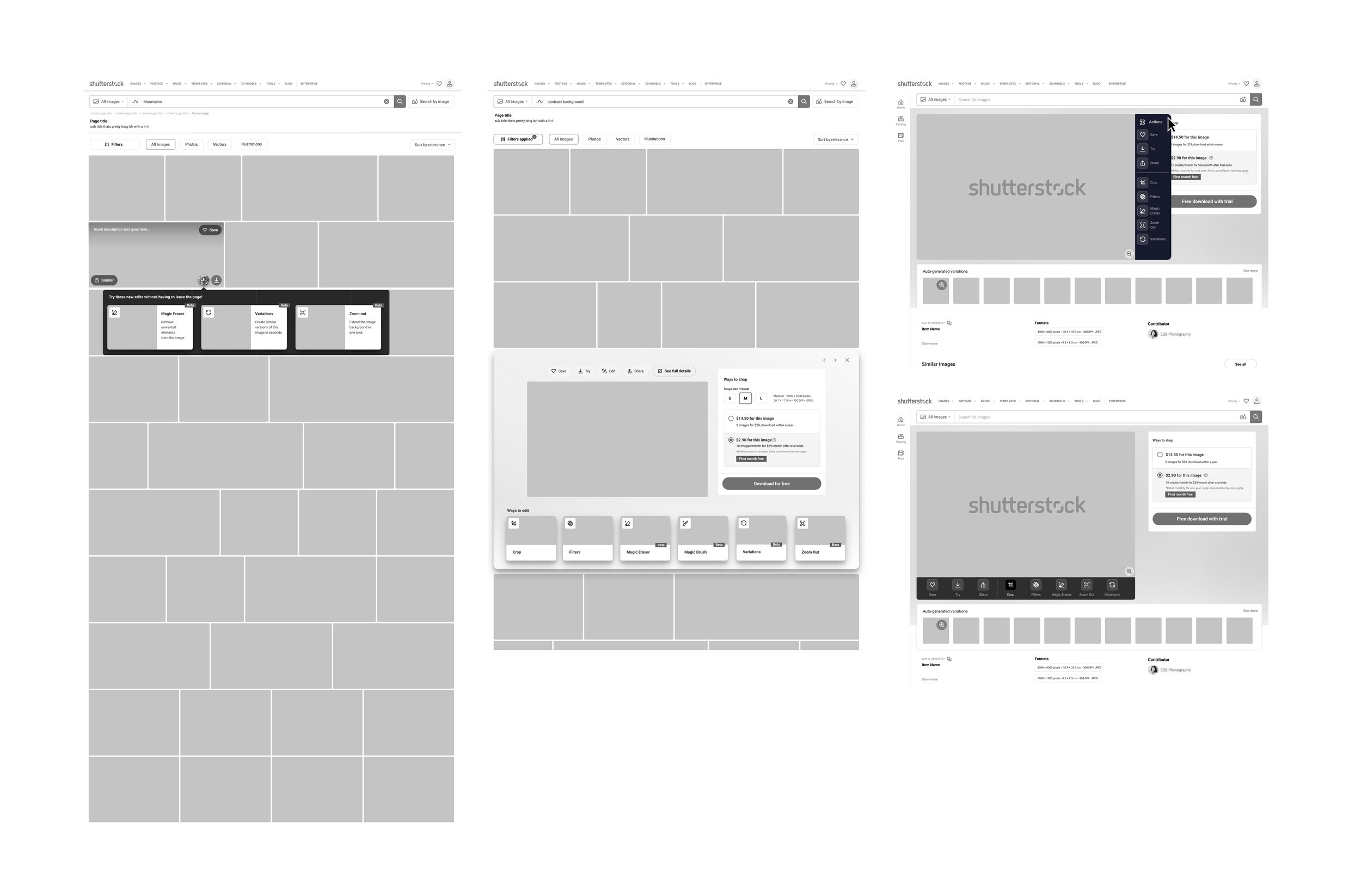

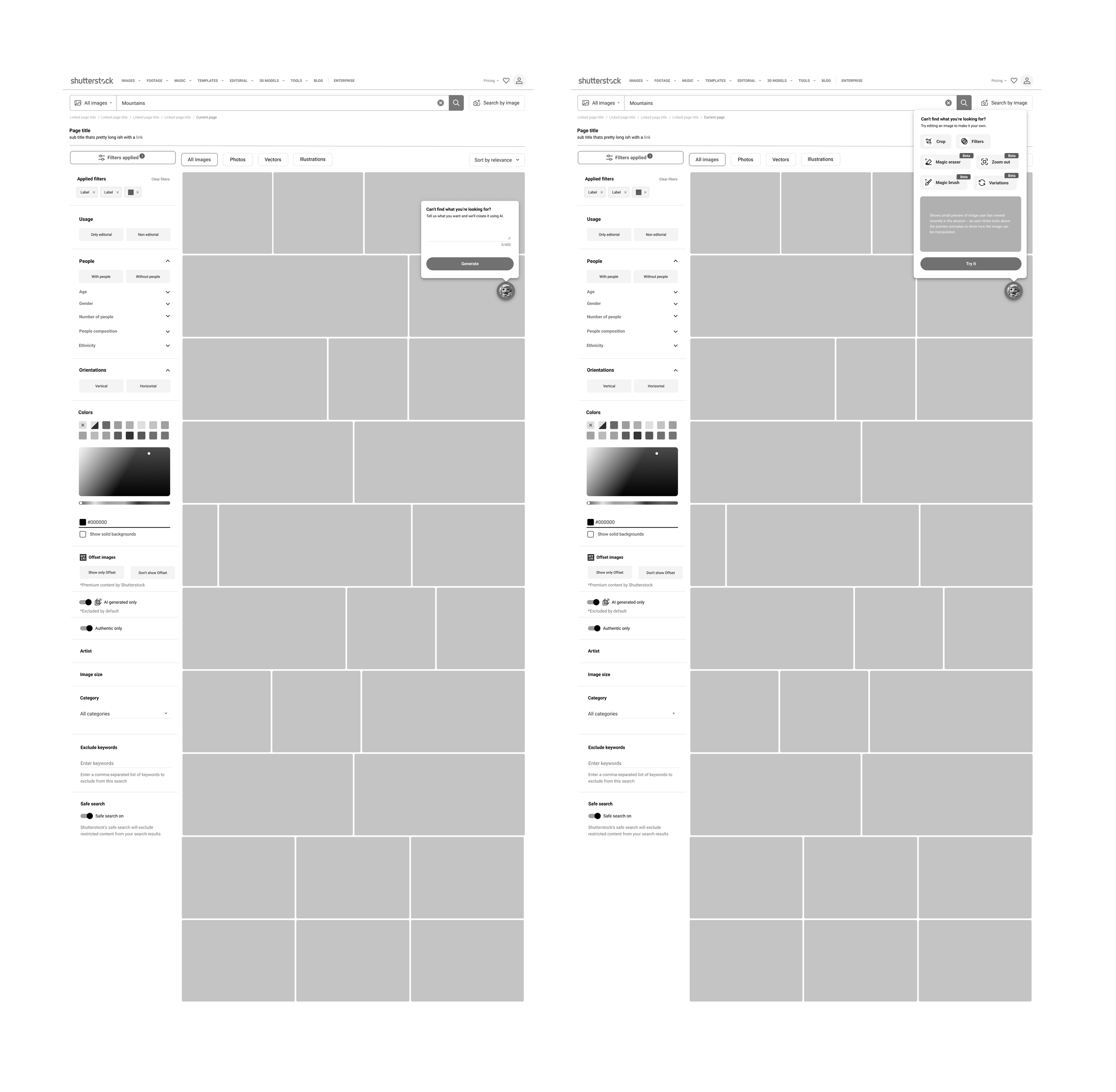

Based on site traffic, we decided to focus on two areas of our funnel: the Search Results Page (SRP) and the Asset Detail Page (ADP). We also decided to prioritize small, low-effort iterative tests to identify the best areas of opportunity. We then reviewed the ideas generated in our brainstorming session, prioritized them, and moved the ideas that best met our criteria to mid-fidelity designs.

pivot

As we started to roll out our list of tests, we encountered technical limitations on the ADP that led to significant deployment delays. We quickly pivoted and focused our efforts on the SRP, which didn't present the same technical hurdles. This shift also allowed us to reprioritize some ideas we had moved to our backlog that focused on reducing session drop-off and bounce rates.

phase 01 results

After running the test for 16 days and reaching statistical significance, we saw the engagement rate for our AI tools jump from 2% to 23%. We also saw a corresponding 1.8% decline in overall conversion, so we needed to dig deeper to understand its cause.

Our Next Steps:

Identify the cause of the decline in overall conversion.

Deploy tests on the ADP to see whether we could achieve similar engagement gains without a negative impact on conversion.

Deploy tests to reduce bounce rate and SRP drop-off.